Large language models are no longer experimental assets confined to innovation labs. For enterprises and well-established startups, they are becoming foundational components of customer platforms, internal systems, and data-driven decision workflows. However, production-grade adoption requires much more than connecting an API to a chatbot interface. Enterprise-ready custom LLM solutions rely on a carefully designed technical architecture that balances performance, security, scalability, and cost management.

This article breaks down the architectural layers behind enterprise implementations and explains how mature LLM Development Services organize systems for real operational impact rather than short-term demonstrations.

Why Architecture Matters in Enterprise LLM Adoption

Decision-makers often focus first on model capability. Model selection is important, but architecture determines whether a solution can scale across departments, comply with governance standards, and integrate into existing technology ecosystems.

An enterprise-grade approach to Custom LLM Development addresses challenges such as data isolation, latency at scale, auditability, and long-term cost efficiency. According to recent studies of enterprise AI adoption, most LLM initiatives do not get beyond the pilot phase because of architectural constraints, not model quality.

This is where experienced LLM Consulting Services are critical. Architecture choices made in the early stages can either speed adoption or quietly stall it months later.

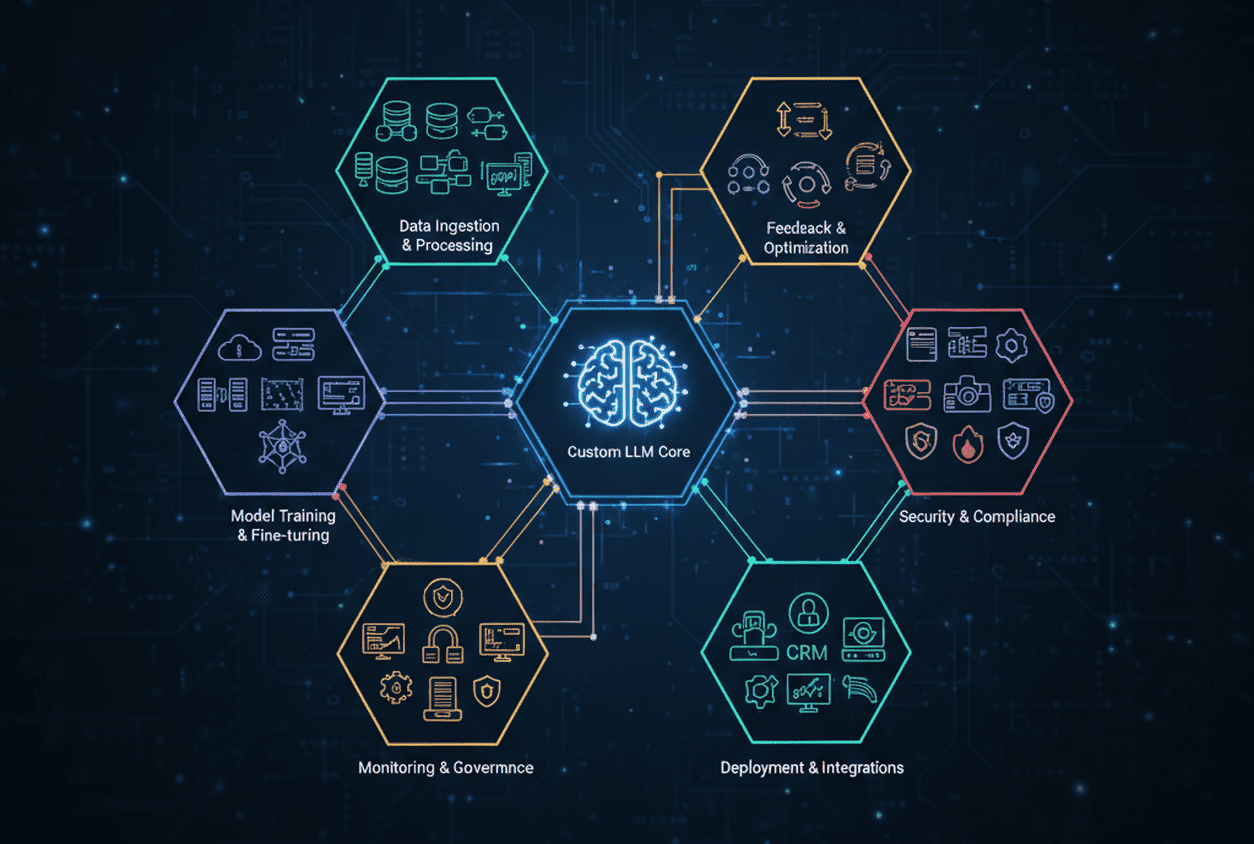

Core Layers of an Enterprise LLM Architecture

1. Data Foundation and Knowledge Layer

At the very foundation of any custom LLM solution is the enterprise data layer. This includes structured data (such as databases), unstructured data (such as documents and tickets), and semi-structured data (such as logs or emails).

Enterprise architectures rarely pass raw data directly to models. Instead, they rely on a governed data pipeline that includes:

- Secure ingestion and classification

- Semantic retrieval through a vector database

- Access control and traceability through metadata tagging

Retrieval-augmented generation has become the dominant approach here. Instead of retraining models with each update, the system retrieves relevant enterprise knowledge at inference time. This improves accuracy while preserving data separation, which most regulated industries require.
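The retrieval step can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Chunk` structure, the toy three-dimensional embeddings, the role names, and the in-memory `INDEX` that stands in for a real vector database. The point it shows is the ordering: metadata-based access filtering happens before similarity ranking, so restricted content never reaches the prompt.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    embedding: list[float]      # toy 3-d vectors; real ones come from an embedding model
    allowed_roles: set[str]     # metadata tag used for access control
    source: str                 # metadata tag used for traceability


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_embedding, chunks, user_roles, top_k=2):
    """Drop chunks the caller may not see, then rank the rest by similarity."""
    permitted = [c for c in chunks if c.allowed_roles & user_roles]
    permitted.sort(key=lambda c: cosine(query_embedding, c.embedding), reverse=True)
    return permitted[:top_k]


def build_prompt(question, retrieved):
    """Assemble the inference-time prompt, citing each chunk's source."""
    context = "\n".join(f"[{c.source}] {c.text}" for c in retrieved)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical in-memory index standing in for a vector database.
INDEX = [
    Chunk("Refunds are processed within 14 days.", [1.0, 0.0, 0.0], {"support", "finance"}, "policy.md"),
    Chunk("Q3 payroll totals are confidential.", [0.9, 0.1, 0.0], {"finance"}, "payroll.xlsx"),
    Chunk("VPN access requires a hardware token.", [0.0, 1.0, 0.0], {"support", "it"}, "it-handbook.md"),
]

hits = retrieve([1.0, 0.0, 0.0], INDEX, user_roles={"support"})
print(build_prompt("How long do refunds take?", hits))
```

A caller with only the `support` role never sees the payroll chunk, even though it is semantically close to the query. Updating knowledge means updating the index, not the model.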

A good LLM Development Company designs this layer so it can accommodate changing data volumes without re-architecting the entire system.

2. Model Strategy and Deployment Layer

Enterprise systems rarely rely on a single model. Production environments frequently contain a mix of foundation models, fine-tuned variants, and task-specific models that are optimized for cost or speed.

Key architectural considerations include:

- Model abstraction layers that enable the switching of providers

- Support for fine-tuning/adapter-based training

- Flexibility of deployment in cloud, hybrid or private infrastructure

This abstraction insulates enterprises from vendor lock-in while enabling experimentation as the model landscape evolves. Advanced LLM Development Services also implement routing logic that dynamically distributes workloads across different models based on complexity or sensitivity.
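A minimal sketch of an abstraction layer with routing might look like the following. The `ChatModel` interface, the `StubModel` placeholders, and the prompt-length threshold used as a stand-in for "complexity" are all assumptions for illustration; a real system would wrap actual provider clients and use a more meaningful complexity signal.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatModel(Protocol):
    """The only interface application code depends on (hypothetical)."""
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModel:
    """Placeholder for a provider-specific client wrapped behind ChatModel."""
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] response to {len(prompt)} chars"


@dataclass
class Router:
    private_model: ChatModel   # e.g. self-hosted, for sensitive workloads
    small_model: ChatModel     # cheaper and faster
    large_model: ChatModel     # more capable and more expensive
    complexity_threshold: int = 200   # assumed proxy: prompt length in characters

    def select(self, prompt: str, sensitive: bool) -> ChatModel:
        # Sensitivity takes priority over cost or capability.
        if sensitive:
            return self.private_model
        if len(prompt) > self.complexity_threshold:
            return self.large_model
        return self.small_model

    def complete(self, prompt: str, sensitive: bool = False) -> str:
        return self.select(prompt, sensitive).complete(prompt)


router = Router(StubModel("private"), StubModel("small"), StubModel("large"))
print(router.complete("Summarize this memo."))
print(router.complete("Review this contract clause.", sensitive=True))
```

Because callers only see `Router.complete`, swapping a provider means replacing one wrapped client rather than touching application code.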

3. Prompt Engineering and Orchestration Layer

Prompts are not static inputs in enterprise environments. They are adapted to the use case, user role, and context. Mature systems treat prompts as versioned, testable assets.

The orchestration layer manages:

- Governance-controlled prompt templates

- Multi-step reasoning workflows

- Tool calling and API chaining

For example, an LLM-powered compliance assistant might retrieve policies, analyze transaction data, and generate explanations in a controlled sequence. This structured orchestration is what sets enterprise LLM-powered solutions apart from consumer chat interfaces.
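The compliance assistant example could be sketched roughly as follows, using only the Python standard library. The template names, version labels, and the three injected callables (`retrieve_policy`, `load_transaction`, `generate`) are hypothetical; they stand in for a retrieval service, a data lookup, and a model call.

```python
from string import Template

# Versioned prompt templates, keyed by (name, version). Contents are illustrative.
PROMPTS = {
    ("compliance_explain", "v1"): Template(
        "Does this transaction comply?\nPolicy: $policy\nTransaction: $transaction"
    ),
    ("compliance_explain", "v2"): Template(
        "You are assisting a $role.\nPolicy: $policy\nTransaction: $transaction\n"
        "State whether the transaction complies and cite the policy clause."
    ),
}


def render(name, version, **fields):
    """Fill a specific template version; a missing field raises KeyError."""
    return PROMPTS[(name, version)].substitute(**fields)


def run_compliance_check(transaction_id, role, retrieve_policy, load_transaction,
                         generate, prompt_version="v2"):
    """Run the steps in a fixed order: retrieve, load, render, generate."""
    policy = retrieve_policy(transaction_id)
    transaction = load_transaction(transaction_id)
    prompt = render("compliance_explain", prompt_version,
                    role=role, policy=policy, transaction=transaction)
    return {"prompt_version": prompt_version, "answer": generate(prompt)}


result = run_compliance_check(
    "tx-1",
    role="auditor",
    retrieve_policy=lambda tx: "Transfers over 10,000 need two approvals.",
    load_transaction=lambda tx: f"{tx}: 12,000 with one approval",
    generate=lambda prompt: f"(stub model output for {len(prompt)} chars)",
)
print(result)
```

Recording `prompt_version` alongside each answer is one way to make prompt changes testable and traceable, since an output can always be tied back to the exact template that produced it.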

4. Security, Privacy, and Governance Layer

Security is one of the most common barriers to enterprise LLM adoption. Architecture has to address data exposure risks without compromising usability.

Enterprise-ready systems implement:

- Role-based access controls

- Data masking and redaction

- Audit logging and traceability

- Model interaction monitoring

This layer is designed to keep sensitive data within approved boundaries, even when third-party models are used.
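As a rough sketch of how these controls can wrap a model call, the example below combines a role check, redaction, and an audit record. The role table, the two regular expressions, and the `guarded_call` helper are assumptions for illustration; simple patterns like these are not adequate PII detection for production use.

```python
import re
from datetime import datetime, timezone

# Illustrative patterns only; real deployments need far more robust detection.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d{4}(?:[ -]?\d{4}){3}\b")

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {"analyst": {"ask"}, "admin": {"ask", "export"}}


def redact(text: str) -> str:
    """Mask email addresses and 16-digit card numbers before text leaves the boundary."""
    return CARD.sub("[CARD]", EMAIL.sub("[EMAIL]", text))


def guarded_call(user, role, action, prompt, model, audit_log):
    """Check the role, redact the prompt, log the attempt, then call the model."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    safe_prompt = redact(prompt)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
        "prompt": safe_prompt,   # only the redacted form is stored
    })
    if not allowed:
        raise PermissionError(f"role {role!r} may not perform {action!r}")
    return model(safe_prompt)


log = []
echo_model = lambda p: p   # stand-in for a third-party model call
print(guarded_call("u1", "analyst", "ask",
                   "Email jane.doe@example.com about card 4111 1111 1111 1111",
                   echo_model, log))
```

Denied attempts are logged before the exception is raised, so the audit trail covers both successful and rejected interactions.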

LLM Integration Services often focus on this layer to connect AI systems with the enterprise's existing security infrastructure.

5. Integration and API Layer

LLMs are rarely operated as standalone tools. Their value lies in bringing intelligence to existing systems such as CRM platforms, ERP workflows, or internal dashboards.

The integration layer provides:

- Stable APIs for internal and external consumers

- Event-driven triggers linked to business workflows

- Compatibility with legacy systems

This is where custom LLM solutions become operational assets rather than experimental features. A properly designed integration layer enables enterprises to deploy LLM-powered capabilities incrementally across teams.
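An event-driven trigger can be sketched with a tiny in-process event bus. The `EventBus` class, the `ticket.created` event name, and the ticket fields are hypothetical; in practice the bus would usually be a message broker or the webhook mechanism of an existing system.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process publish/subscribe, standing in for a real message broker."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Returns each handler's result; unknown event types yield an empty list.
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


def make_ticket_summarizer(model):
    """Build a handler that attaches an LLM summary to a newly created ticket."""
    def on_ticket_created(ticket):
        summary = model(f"Summarize this support ticket in one sentence:\n{ticket['body']}")
        return {"ticket_id": ticket["id"], "summary": summary}
    return on_ticket_created


bus = EventBus()
stub_model = lambda prompt: f"(stub summary of {len(prompt)} chars)"
bus.subscribe("ticket.created", make_ticket_summarizer(stub_model))
print(bus.publish("ticket.created", {"id": 7, "body": "Printer on floor 3 is offline."}))
```

New capabilities can be added by subscribing another handler, which matches the incremental rollout described above: existing workflows keep publishing the same events.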

6. Observability, Cost Control, and Optimization Layer

Without visibility, LLM initiatives quickly become expensive and difficult to justify. Enterprise architectures include monitoring systems to track usage, latency, response quality, and cost per interaction.

Key metrics often include:

- Token consumption per department or feature

- Response accuracy and fallback rates

- Infrastructure utilization

This data supports continuous optimization cycles, enabling organizations to revise prompts, adjust model selection, and improve ROI. Strong LLM Consulting Services treat observability as a core part of the architecture, not an afterthought.
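To make these metrics concrete, here is a small sketch that aggregates per-department token usage, estimated cost, fallback rate, and average latency. The `Interaction` record and the flat `PRICE_PER_1K_TOKENS` figure are assumptions for illustration; real pricing usually differs by model and by input versus output tokens.

```python
from collections import defaultdict
from dataclasses import dataclass

PRICE_PER_1K_TOKENS = 0.002   # hypothetical flat rate, not a real price


@dataclass
class Interaction:
    department: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    used_fallback: bool


def summarize(interactions):
    """Aggregate usage per department into tokens, cost, fallback rate, and latency."""
    totals = defaultdict(lambda: {"tokens": 0, "calls": 0, "fallbacks": 0, "latency_ms": 0.0})
    for i in interactions:
        t = totals[i.department]
        t["tokens"] += i.prompt_tokens + i.completion_tokens
        t["calls"] += 1
        t["fallbacks"] += int(i.used_fallback)
        t["latency_ms"] += i.latency_ms
    return {
        dept: {
            "tokens": t["tokens"],
            "cost": round(t["tokens"] / 1000 * PRICE_PER_1K_TOKENS, 6),
            "fallback_rate": t["fallbacks"] / t["calls"],
            "avg_latency_ms": t["latency_ms"] / t["calls"],
        }
        for dept, t in totals.items()
    }


sample = [
    Interaction("support", 400, 100, 120.0, False),
    Interaction("support", 300, 200, 180.0, True),
    Interaction("finance", 1000, 1000, 300.0, False),
]
for dept, stats in summarize(sample).items():
    print(dept, stats)
```

A report like this is the kind of input an optimization cycle needs: a high fallback rate points at prompt or model issues, while a high cost per department points at routing or caching opportunities.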

Architectural Patterns for Common Enterprise Use Cases

Different problems in business require different emphases in architecture:

- Internal knowledge systems prioritize retrieval accuracy and access control

- Customer-facing applications prioritize latency, scalability, and brand-safe outputs

- Decision support systems prioritize traceability and explainability

In each case, the same basic architecture adapts through configuration rather than reinvention. This modularity is what allows enterprises to scale LLM-powered solutions across multiple use cases.
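One way to picture adaptation through configuration is a set of profiles that tune the same pipeline for each use case. The field names and every value below are hypothetical, chosen only to mirror the priorities listed above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class UseCaseProfile:
    top_k: int                     # how many retrieved chunks to include
    enforce_access_control: bool   # filter retrieval by caller role
    max_latency_ms: int            # latency budget used by routing
    output_filter: bool            # apply brand-safety checks to responses
    require_citations: bool        # attach sources for traceability


# Illustrative values only; real settings depend on the workload.
PROFILES = {
    "internal_knowledge": UseCaseProfile(8, True, 3000, False, False),
    "customer_facing": UseCaseProfile(3, True, 800, True, False),
    "decision_support": UseCaseProfile(5, True, 5000, False, True),
}


def configure(use_case: str, **overrides) -> UseCaseProfile:
    """Return the profile for a use case, optionally overriding individual fields."""
    return replace(PROFILES[use_case], **overrides)


print(configure("customer_facing"))
print(configure("internal_knowledge", top_k=12))
```

Because profiles are frozen, `configure` returns a modified copy and leaves the shared defaults untouched, so one team's override does not leak into another's deployment.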

The Role of a Strategic LLM Development Partner

Building this architecture in-house requires deep expertise in AI engineering, cloud infrastructure, and enterprise security. Many organizations prefer working with a specialized LLM Development Company to implement it quickly without accumulating architectural debt.

A good partner does more than build models. They design systems that work with existing technology stacks, align with governance requirements, and evolve as business needs change. This approach helps reduce long-term costs and increases adoption across the organization.

Final Thoughts

Enterprise-ready custom LLM solutions are defined by architecture, not hype. The most successful implementations are those that address data foundations, system integration, governance, and observability from the beginning.

For decision-makers, the question is no longer whether LLMs can deliver value. The real question is whether the underlying architecture is built to support scale, security, and measurable business outcomes. Organizations that invest early in strong technical design are the ones turning LLM-powered solutions into long-lasting competitive advantages rather than fleeting experiments.