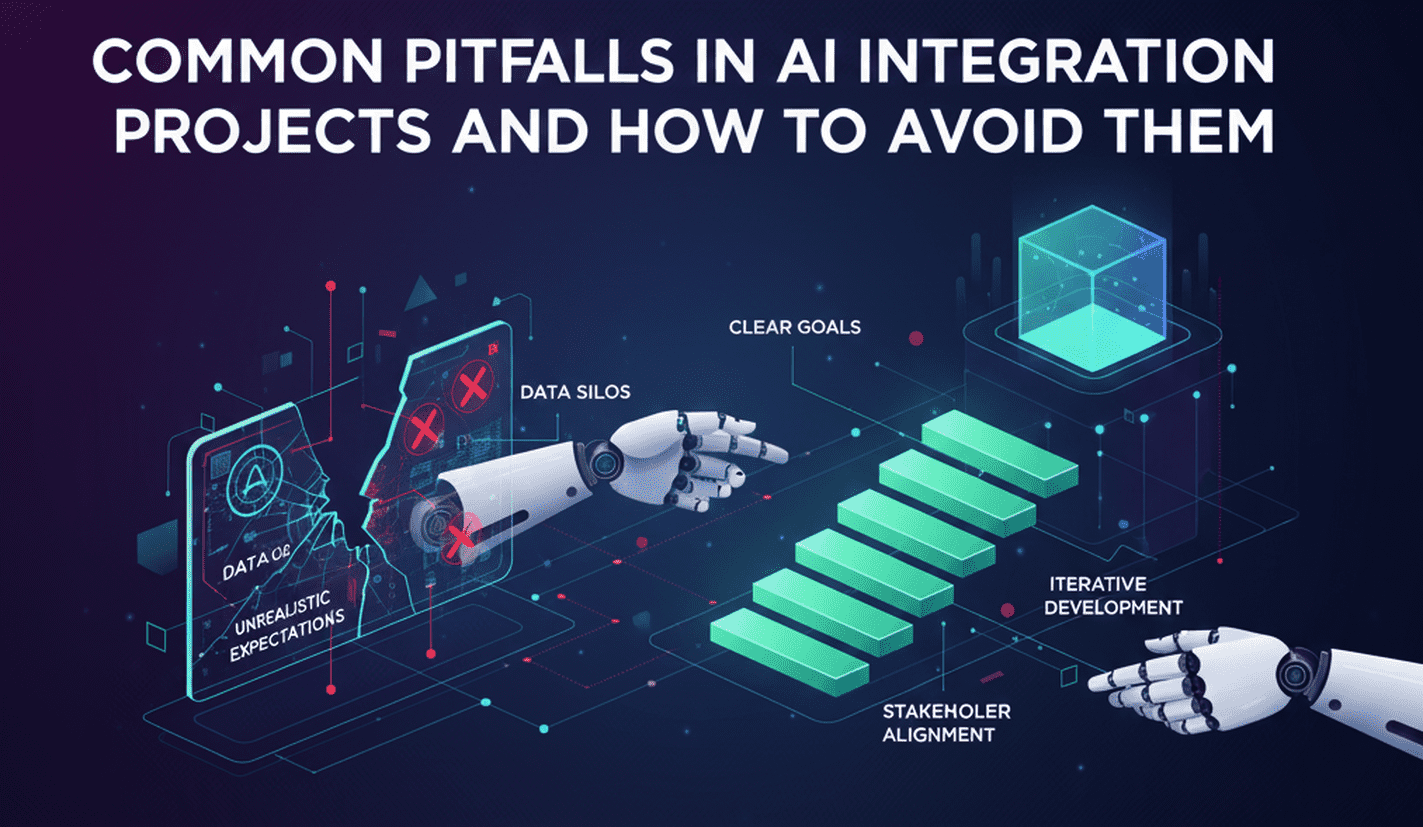

AI integration has become a top strategic priority for enterprises and well-funded startups entering 2026. Technology investment patterns are shifting as organizations move past small-scale pilots and focus on enterprise-grade systems that operate across departments, geographies, and data ecosystems. Leaders want productivity gains, faster decision intelligence, improved automation, and stronger customer experience outcomes. These expectations make Artificial Intelligence Integration Services central to digital transformation roadmaps.

Yet even with rising maturity, many AI programs continue to face recurring challenges. According to recent industry assessments, fewer than half of enterprise AI systems deliver the expected ROI within the first deployment cycle. These gaps rarely arise from model performance alone. They surface when AI intersects with legacy infrastructure, unclear governance, organizational readiness, and fragmented data foundations.

To support leaders planning AI investments in 2026, the following sections highlight frequent pitfalls and practical strategies to avoid them.

1. Misalignment between strategic goals and technical execution

Enterprises often begin integration work with ambitious goals, but the interpretation of those goals shifts once engineering teams begin implementation. If business expectations and technical decisions are not anchored to a shared framework, AI outcomes become inconsistent.

How to avoid this in 2026:

• Build a unified decision blueprint that outlines business priorities, technical dependencies, and measurable success metrics.

• Treat AI as a strategic business asset rather than a standalone technology initiative.

• Conduct roadmap sessions where product, engineering, risk, and operations teams define a shared success narrative.

Using AI Integration Consulting early allows teams to validate assumptions and prevent fragmented execution.

2. Ignoring data readiness issues that slow or derail AI performance

Enterprises frequently assume their data is ready for AI workflows, only to discover missing fields, inconsistent formats, or compliance risks late in the process. This results in slow model training, unreliable predictions, and increased operational costs.

Strong action steps for 2026:

• Perform an end-to-end audit of data accessibility, quality, lineage, and regulatory compliance.

• Introduce automated data monitoring tools to maintain quality after integration.

• Encourage business units to formalize data ownership and documentation habits.

Studies indicate that data inconsistencies remain one of the top reasons AI initiatives underperform.
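To make the audit step concrete, here is a minimal sketch of a field-level readiness check. The schema, field names, and allowed region codes are hypothetical and stand in for whatever a real data catalog defines; the point is only to show how missing fields and inconsistent formats can be counted before any model training begins.

```python
from datetime import datetime


def is_iso_date(value):
    """Return True when the value is a YYYY-MM-DD date string."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False


# Hypothetical schema: field name -> validator that returns True for acceptable values.
REQUIRED_FIELDS = {
    "customer_id": lambda v: isinstance(v, str) and v.strip() != "",
    "signup_date": is_iso_date,
    "region": lambda v: v in {"NA", "EU", "APAC"},
}


def audit_records(records):
    """Count missing and malformed values for each required field."""
    report = {name: {"missing": 0, "invalid": 0} for name in REQUIRED_FIELDS}
    for record in records:
        for name, is_valid in REQUIRED_FIELDS.items():
            value = record.get(name)
            if value is None or value == "":
                report[name]["missing"] += 1
            elif not is_valid(value):
                report[name]["invalid"] += 1
    return report


# Illustrative records only; not real data.
sample = [
    {"customer_id": "C-001", "signup_date": "2025-03-14", "region": "EU"},
    {"customer_id": "C-002", "signup_date": "14/03/2025", "region": "EU"},
    {"customer_id": "", "signup_date": "2025-04-01", "region": "LATAM"},
]
report = audit_records(sample)
for field, counts in report.items():
    print(field, counts)
```

A report like this gives data owners in each business unit a specific list of fields to fix, which is usually more actionable than a general quality score.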

3. Viewing integration as a one-time event instead of a lifecycle

The conditions AI systems operate in shift with markets, regulations, and customer behavior. Treating integration as a project rather than a lifecycle leads to model degradation, reduced accuracy, and higher operational risks.

2026 best practices:

• Invest in model lifecycle management supported by monitoring, retraining intervals, behavior audits, and performance dashboards.

• Build operational capacity for continuous improvement.

• Work with an AI integration solutions partner that provides maintenance, evaluation, and engineering support.

This lifecycle approach keeps AI responsive, stable, and strategically aligned.
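One simple way to picture the monitoring piece is a rolling accuracy check that flags a model for retraining. The sketch below is an assumption-laden illustration: the class name, window size, and tolerance are hypothetical, and production monitoring typically tracks more signals than accuracy alone.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks rolling accuracy and flags a drop below the baseline minus a tolerance."""

    def __init__(self, baseline_accuracy, window_size=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent window_size outcomes are kept.
        self.outcomes = deque(maxlen=window_size)

    def record(self, prediction, actual):
        """Store whether a prediction matched the observed outcome."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        """Return True once a full window shows accuracy below the threshold."""
        accuracy = self.rolling_accuracy()
        # Wait for a full window so a few early errors do not raise a false alarm.
        if accuracy is None or len(self.outcomes) < self.outcomes.maxlen:
            return False
        return accuracy < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline_accuracy=0.90, window_size=10, tolerance=0.05)
for i in range(10):
    # Illustrative stream: the last two of ten predictions are wrong.
    monitor.record(prediction=1, actual=1 if i < 8 else 0)

print(monitor.rolling_accuracy(), monitor.needs_retraining())
```

The retraining interval then becomes a response to observed behavior rather than a fixed calendar date, which is the practical difference between a project and a lifecycle.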

4. Underestimating the challenges of legacy systems

Legacy environments often include outdated architectures, undocumented workflows, and interfaces that were never designed for AI-driven operations. These constraints lead to delays, integration failures, or high engineering complexity.

Smart modernization paths for 2026:

• Assess system bottlenecks before integration to avoid hidden risks.

• Use middleware to create interoperability without forcing immediate system replacement.

• Plan modernization in phases, especially for regulated industries where systems anchor critical operations.

Gradual transformation helps enterprises achieve AI outcomes without jeopardizing system stability.
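As an illustration of the middleware idea, the sketch below translates a fixed-width record from a legacy system into a payload a model service could consume, without changing the legacy system itself. The record layout, field names, and sample line are all hypothetical.

```python
# Hypothetical fixed-width layout: (field name, start offset, end offset).
LEGACY_LAYOUT = [
    ("account_id", 0, 8),
    ("branch_code", 8, 12),
    ("balance_cents", 12, 22),
]


def parse_legacy_record(line):
    """Translate one fixed-width legacy record into a dictionary."""
    expected_length = LEGACY_LAYOUT[-1][2]
    if len(line) < expected_length:
        raise ValueError(f"record too short: {len(line)} characters")
    fields = {name: line[start:end].strip() for name, start, end in LEGACY_LAYOUT}
    fields["balance_cents"] = int(fields["balance_cents"])
    return fields


def to_model_features(record):
    """Shape the parsed record into the payload a downstream model might expect."""
    return {
        "account_id": record["account_id"],
        "branch_code": record["branch_code"],
        "balance": record["balance_cents"] / 100,
    }


# Illustrative record only: 8-char account, 4-char branch, 10-digit balance in cents.
legacy_line = "AC001234" + "B017" + "0000152050"
features = to_model_features(parse_legacy_record(legacy_line))
print(features)
```

Keeping the translation in a thin adapter like this means the legacy format can later be retired in phases, with only the adapter changing.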

5. Weak governance models that overlook new regulatory requirements

The regulatory landscape for AI is expanding rapidly across North America, Europe, and Asia. Compliance expectations cover transparency, explainability, data rights, model accountability, and security protocols.

Governance essentials for 2026:

• Establish model approval workflows, documentation standards, and audit trails.

• Validate security at every integration point, including data ingestion, inference layers, and storage.

• Train leadership on emerging AI policies to avoid unintentional compliance gaps.

A strong governance program ensures responsible scaling and reduces long-term legal risks.
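An audit trail is easier to trust when later edits are detectable. The sketch below shows one common pattern, a hash-chained log of model approval events, using only the Python standard library. The function names and event fields are hypothetical, and timestamps are left out to keep the example deterministic; a real trail would record them along with durable storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder back-link for the first entry


def _digest(body):
    """Hash an entry body with a stable key order."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(trail, model_id, action, actor):
    """Append an approval event whose hash covers the previous entry's hash."""
    previous_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    body = {
        "model_id": model_id,
        "action": action,
        "actor": actor,
        "previous_hash": previous_hash,
    }
    trail.append({**body, "hash": _digest(body)})
    return trail


def verify_trail(trail):
    """Return True when every entry's hash and back-link are intact."""
    previous_hash = GENESIS_HASH
    for entry in trail:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["previous_hash"] != previous_hash or entry["hash"] != _digest(body):
            return False
        previous_hash = entry["hash"]
    return True


# Illustrative events only.
trail = []
append_entry(trail, "churn-model-v2", "submitted", "data-science")
append_entry(trail, "churn-model-v2", "approved", "risk-committee")
print(verify_trail(trail))
```

Because each entry's hash depends on the one before it, changing an earlier approval record invalidates the chain from that point on.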

6. Collaboration gaps between technical, operational, and executive teams

Global enterprises operate with distributed teams that rely on different tools and priorities. Misalignment across these units creates silos, slow decisions, and unclear ownership.

Collaboration improvements for 2026:

• Introduce shared dashboards that track progress, risks, and upcoming milestones.

• Designate a cross-functional program owner who provides clarity and decision authority.

• Encourage regular milestone reviews with participation from engineering, operations, legal, and executive leadership.

Strong collaboration accelerates timelines and supports predictable delivery.

7. Selecting vendors and tools without systematic evaluation

The AI landscape is expanding, but selecting tools based on marketing claims or limited pilots increases long-term risk. Poor compatibility, insufficient security, or complex onboarding can derail enterprise-scale deployment.

Vendor evaluation strategy for 2026:

• Validate interoperability with existing systems.

• Review tool maturity, roadmap stability, documentation quality, and support tiers.

• Assess security certifications and compliance alignment.

Enterprises benefit from partnering with an AI integration company that understands multi-platform environments and can guide rational vendor selection.
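A weighted scoring matrix is one way to make the evaluation criteria above systematic. In the sketch below, the weights, the 1 to 5 rating scale, and the vendor names are all hypothetical; each organization would set its own weights to reflect its priorities.

```python
# Hypothetical weights for the criteria discussed above; they sum to 1.0.
CRITERIA_WEIGHTS = {
    "interoperability": 0.30,
    "maturity": 0.20,
    "documentation": 0.15,
    "support": 0.15,
    "security": 0.20,
}


def weighted_score(ratings):
    """Combine 1-5 ratings into a single weighted score."""
    missing = set(CRITERIA_WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(CRITERIA_WEIGHTS[name] * ratings[name] for name in CRITERIA_WEIGHTS)


def rank_vendors(vendors):
    """Return vendor names ordered from highest to lowest weighted score."""
    return sorted(vendors, key=lambda name: weighted_score(vendors[name]), reverse=True)


# Illustrative ratings only; not an assessment of any real product.
vendors = {
    "Vendor A": {"interoperability": 5, "maturity": 3, "documentation": 4, "support": 3, "security": 4},
    "Vendor B": {"interoperability": 3, "maturity": 5, "documentation": 4, "support": 4, "security": 3},
}
ranking = rank_vendors(vendors)
for name in ranking:
    print(name, round(weighted_score(vendors[name]), 2))
```

Writing the weights down before scoring any vendor keeps the comparison from being reshaped around a favorite after the fact.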

8. Insufficient preparation of workforce roles affected by AI adoption

People must understand new processes, governance requirements, and operational workflows. When training is neglected, adoption becomes slow and inconsistent.

A robust workforce readiness plan includes:

• Hands-on training modules for technical and non-technical teams.

• Guidance on workflow adjustments and compliance responsibilities.

• Practical examples of AI use cases within each department.

Strong workforce enablement maximizes ROI and reduces operational friction.

9. Continuous experimentation with no measurable business impact

Enterprises sometimes invest heavily in experimentation without connecting outcomes to measurable gains. Without defined ROI metrics, teams keep building proofs of concept that never deliver production value.

How to prevent this in 2026:

• Set clear business KPIs before initiating development.

• Track performance improvements, cost savings, and cycle-time reductions.

• Build a value measurement framework that leadership can reference for budgeting.

Outcome-driven experimentation creates accountability and accelerates enterprise adoption.
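A value measurement framework can start with two simple calculations: return on investment and payback period. The sketch below uses the standard ROI definition, (benefit minus cost) divided by cost; the function names and all figures are hypothetical and only illustrate the arithmetic.

```python
import math


def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost, monthly_benefit, monthly_cost=0):
    """Whole months until cumulative net benefit covers the upfront cost.

    Returns None when the monthly net benefit is zero or negative,
    because the investment would never pay back.
    """
    net_monthly = monthly_benefit - monthly_cost
    if net_monthly <= 0:
        return None
    return math.ceil(upfront_cost / net_monthly)


# Illustrative figures only.
print(roi(total_benefit=450_000, total_cost=300_000))
print(payback_months(upfront_cost=120_000, monthly_benefit=25_000, monthly_cost=5_000))
```

Agreeing on these inputs before development starts gives leadership a consistent reference point when deciding whether a proof of concept should move to production.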

10. The strategic value of expert guidance

As AI becomes more embedded in global enterprise operations, the demand for advisory strength continues to increase. Companies working with experienced partners in Generative AI Consulting or AI Integration Services gain strategic support, faster delivery, and fewer operational risks. The combination of expertise, governance structure, and engineering capacity enables organizations to scale with confidence.